StoryJam

Designing collaboration systems that make equity the default

Led 0→1 product development for Ford's first participation equity platform—eliminating anchoring bias through simultaneous reveal and proving that better decisions aren't just cultural, they're structural and measurable.

Overview

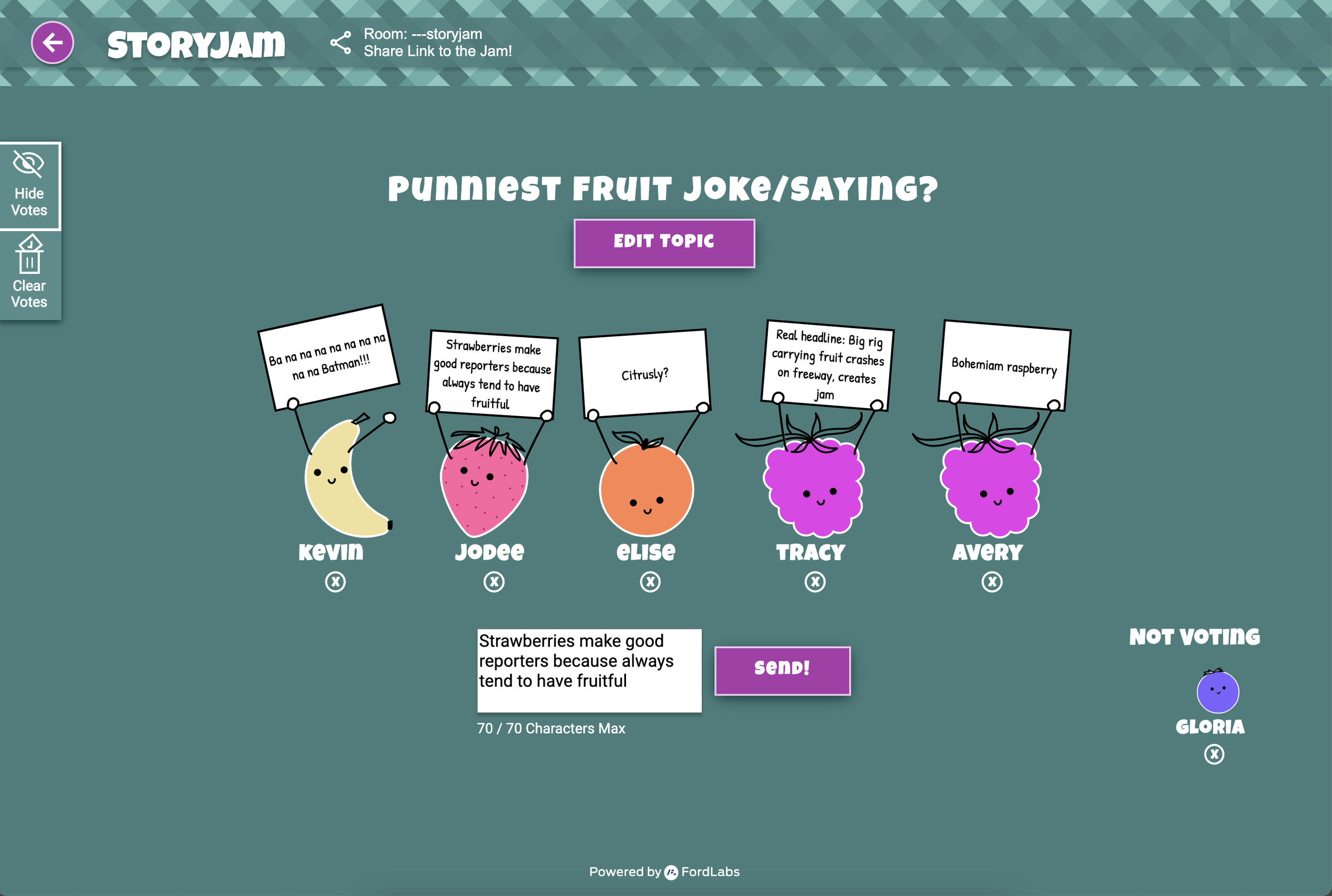

StoryJam is an internal collaboration platform that enables simultaneous, independent input across story pointing, retrospectives, and strategic planning—reducing hierarchy effects, performance pressure, and early-influence bias.

Instead of persuading teams to behave equitably, the system makes equitable participation the default through interaction design.

Real adoption proved that better decisions aren't just cultural—they're structural, interaction-level, and measurable.

The Stakes

This was my first 0→1 PM project, and credibility depended on demonstrating real behavioral and organizational value—not just excitement around a new tool.

To influence leadership, I had to:

Validate a true participation problem (not just a FordLabs preference)

Prove teams would change behavior when given the right conditions

Show measurable outcomes that justified continued investment

Success here unlocked visibility, trust, and future investments in equitable collaboration patterns across the org.

QUICK FACTS

Role: Product Manager (0→1)

Duration: 8 months (discovery → launch → iteration)

Team: 3-4 engineers, 1-2 designers

Company: Ford Motor Company

Impact: 2-3x launch engagement vs. comparable internal tools; 612 monthly users; 4.3/5 satisfaction

Key Innovation: Simultaneous reveal as core interaction pattern preventing anchoring bias

My Role

Product Manager | 0→1 Product Development

Led strategy, discovery, and delivery for Ford's first participation equity platform—eliminating anchoring bias through simultaneous reveal.

What I Did

Product Strategy & Research

Positioned StoryJam as a participation equity platform (not a story-pointing tool).

Conducted 9 user interviews and observed 13 team meetings to uncover how anchoring, hierarchy, and facilitation styles shaped decision outcomes.

Used opportunity mapping to navigate scope expansion without diluting core value.

Analytics & Decision-Making

Designed instrumentation from day one.

Ran the org's first A/B test; the winning variant reached 3.5× the industry CTR benchmark.

Prioritized facilitator neutrality, dynamic participation, and multi-round flexibility based on research insights and engineering tradeoffs.

Accessibility & Stakeholder Leadership

Embedded WCAG 2.1 AA criteria into every user story as a non-negotiable product standard.

Socialized research insights with engineering leadership to build urgency around equitable participation.

Why This Project Mattered to Me

This was my first 0→1 PM project—my opportunity to prove I could lead a product from concept to launch while balancing user needs with organizational objectives. I wanted to apply experiments and analytics to guide iteration, practice structured prioritization frameworks, and demonstrate that accessibility expertise drives product quality, not just compliance.

Strategic Context

The project had two interdependent goals that shaped every decision.

The Dual Challenge

Goal 1: Solve Real User Need

FordLabs teams struggled with remote voting and decision-making. Using Slack and Webex, votes became visible sequentially—early opinions influenced later ones, creating anchoring bias. The same voices dominated while quieter perspectives stayed silent.

Goal 2: Create Cultural Showcase

Leadership wanted to demonstrate equitable participation at scale and influence Ford's broader collaboration culture. This required a solution compelling enough to drive voluntary adoption across teams.

The Critical Dependency

The cultural showcase could only succeed if we first validated genuine user value.

Leadership wouldn't invest in scaling a showcase without proof that teams actually needed this—and would use it. Goal 1 was the prerequisite for Goal 2.

This dependency shaped our entire product strategy: validate the problem broadly, build for real use cases, measure behavioral change, then scale the story.

The Problem

Teams Couldn't Get Equitable Participation in Remote Decisions

Using Slack and Webex for voting, teams faced consistent participation imbalances:

Sequential reveal created anchoring bias: Votes became visible as people submitted them

Early opinions influenced later ones: Some voted immediately while others recalibrated after seeing what others thought

Hierarchy effects amplified: Senior voices carried disproportionate weight

Quieter voices stayed silent: People held back rather than risk disagreeing with early consensus

The result: decisions driven by who spoke first, not by collective intelligence.

What We Needed to Validate

The create-a-thon prototype demonstrated that simultaneous reveal could work for story pointing, but we needed to answer critical questions before building:

Riskiest Assumptions:

Does this problem exist beyond FordLabs' story pointing sessions? (If isolated to our team, we'd build something nobody else needed)

Will teams actually change their behavior if given better tools? (Or is this a cultural issue tools can't solve?)

What features make this usable for real teams? (Prototype worked in controlled demo; real meetings are messy)

If we couldn't validate these assumptions, both goals would fail before we wrote a line of production code.

Discovery

Research Approach

We prioritized testing our riskiest assumption first: Does this problem exist broadly enough to matter?

Research Methods:

Industry Analysis: Researched collaboration patterns and existing tools in the market

9 User Interviews: Understood pain points, current workflows, and team dynamics

13 Contextual Observations: Observed team meetings across Ford to identify real-world participation patterns

Affinity Mapping: Grouped findings to identify common themes

Journey Mapping: Visualized current workflows to pinpoint friction areas and opportunities

Mixed methods revealed the problem extended far beyond what we initially scoped—and that insight transformed our product direction.

Key Research Insights

We set out to improve remote story pointing, but the research revealed a broader collaboration challenge. Four critical insights emerged that directly shaped product strategy:

Story Pointing Was Declining, But the Problem Wasn't

Finding: Story pointing usage was declining at Ford, but participation imbalances persisted across every team ritual.

Across retrospectives, brainstorms, strategy sessions, and ice-breakers, the same pattern surfaced: a few voices dominated while others pulled back.

Insight: The issue wasn't the ceremony—it was the social dynamics that exist in any group decision-making context.

Product Implication: Solve the root problem (participation equity) rather than optimize for one declining use case (story pointing).

Equity Enables Better Decisions, Not Just More Engagement

Finding: When diverse perspectives surfaced independently, teams made stronger decisions with real alignment.

Insight: Facilitators didn't need more "engagement"—they needed clean, diverse signals to reach genuine consensus. Simultaneous reveal wasn't about politeness; it was about decision quality.

Product Implication: Position value as "better decisions" rather than "more inclusive culture" to appeal to outcome-focused stakeholders.

Making Facilitators' Jobs Harder Drives Churn

Finding: We'd overlooked facilitator needs in the prototype. They needed to edit topics, manage participants, run multiple rounds, and guide without voting—all while keeping sessions moving.

Insight: Even with strong participant value, facilitator friction would drive tool abandonment. Facilitators are gatekeepers—if they don't adopt, neither will teams.

Product Implication: Prioritize facilitator experience alongside participant experience. Build features that make facilitation easier, not harder.

Tools Must Adapt to Meeting Reality, Not the Other Way Around

Finding: Real meetings are fluid and imperfect. People join late, drop off, multitask, switch contexts—and most decisions unfold across multiple rounds, not one perfect session.

Insight: Tools that demand perfect conditions don't fit naturally into team rituals. Real adoption requires flexibility that accommodates messy reality.

Product Implication: Build for recurring use and session flexibility, not just one-time perfect meetings.

Strategic Pivot

The Decision Point

Initial Scope: Story pointing tool for agile teams

New Direction: Participation equity platform supporting multiple ceremonies

This created a classic product tradeoff:

Stay Narrow: Customize deeply for story pointing → risk building for declining use case

Go Broad: Support all ceremonies → risk trying to serve everyone and satisfying no one

The Decision Framework

Our research answered four critical questions that made the decision clear:

✅ Should we solve it? Enabling authentic, diverse perspectives to drive better decisions was strategically important for Ford's collaboration culture

✅ Is there a need? Teams struggled with participation imbalance, but existing tools weren't solving it

✅ Does our approach work? Simultaneous reveal effectively reduced anchoring bias

✅ Is the problem widespread? Story pointing was declining, but participation imbalance appeared across all team rituals

The evidence supported expanding to a "participation equity platform."

Why This Served Both Goals

Goal 1 (User Need): Solve the root problem across multiple use cases = more value for teams, higher adoption

Goal 2 (Cultural Showcase): Systemic solution with broader impact = more compelling narrative for leadership

The pivot wasn't scope creep—it was strategic focus on the real problem our research had validated.

Managing Scope

The Challenge

Expand a demo into a scalable participation-equity platform—without delaying launch or over-customizing for edge cases.

Context:

Needed to rebuild prototype for long-term scalability

Had to support multiple ceremony types with minimal variation

Every new feature carried high engineering and timeline cost

Team was small (3-4 engineers, 1-2 designers)

Product Prioritization Strategy

I used three principles to manage scope:

One Core Mechanic

Decision:

Use a single simultaneous-reveal interaction to support all ceremonies without customization.

Rationale:

Rather than build ceremony-specific features (story pointing vs. retrospective vs. planning), use one flexible mechanic that works everywhere.

Tradeoff:

Less ceremony-specific optimization, but dramatically faster to build and easier to maintain.

Result:

Launched on time with broad applicability.
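To make the core mechanic concrete, here is a minimal sketch of simultaneous reveal. It is an illustration only, not StoryJam's actual code: the `Round` class and its method names are assumptions introduced for this example. The point it shows is that, before the reveal, other participants can see who has voted but not what they voted.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Round:
    """Hypothetical voting round: votes stay hidden until revealed."""

    votes: dict[str, str] = field(default_factory=dict)
    revealed: bool = False

    def submit(self, participant: str, value: str) -> None:
        # Votes can be changed freely before the reveal, never after.
        if self.revealed:
            raise ValueError("round already revealed")
        self.votes[participant] = value

    def visible_state(self) -> dict[str, str | None]:
        # Before the reveal, expose only who has voted, not their values.
        if self.revealed:
            return dict(self.votes)
        return {name: None for name in self.votes}

    def reveal(self) -> dict[str, str]:
        # Every vote becomes visible at the same moment.
        self.revealed = True
        return dict(self.votes)
```

Because the same hide-then-reveal step works for a story point, a retro topic, or a planning option, one mechanic like this can serve every ceremony without ceremony-specific logic.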

MVP Feature Discipline

Decision:

Limited scope to core meeting flows; deferred ceremony-specific features.

What We Cut:

- Custom templates for different ceremonies

- Advanced facilitation features (timers, ice-breakers)

- Integration with other Ford tools

- Detailed analytics dashboards

What We Kept:

- Simultaneous reveal (core value)

- Basic room management

- Facilitator controls

- Multi-round support

Rationale:

Ship early to validate adoption, then prioritize only high-value additions based on usage data.

Result:

8-month timeline from discovery to launch.

Data-Driven Roadmap

Decision:

Release early to gather usage data and prioritize only validated needs.

Approach:

- Instrumented analytics from day one

- Tracked voting patterns, room usage, session types

- Used Mouseflow surveys for qualitative feedback

- Let real usage patterns inform roadmap

Rationale:

Don't guess at features—build what users actually need, proven through data.

Result:

Clear prioritization based on evidence, not opinions.

Strategic Feature Decisions

Three research-driven features I prioritized with the team, showing the product thinking behind each decision:

Facilitator Neutrality

Research Insight: Journey mapping revealed we'd overlooked facilitator needs. They guide sessions but shouldn't influence votes—yet existing tools forced them to participate or abstain entirely, both creating awkwardness.

Product Decision: I prioritized role-based functionality with separate voting/non-voting modes.

Engineering Tradeoff: Added complexity to user model and permissions system, requiring additional development time. But this enabled cultural influencers (team leads, managers) to champion the tool without compromising equity.

Why It Mattered: Unlocked adoption among key stakeholders who could drive org-wide usage. Without neutral facilitation, managers would avoid the tool to prevent hierarchy effects.

Result: Facilitators could guide naturally while preserving participation equity.

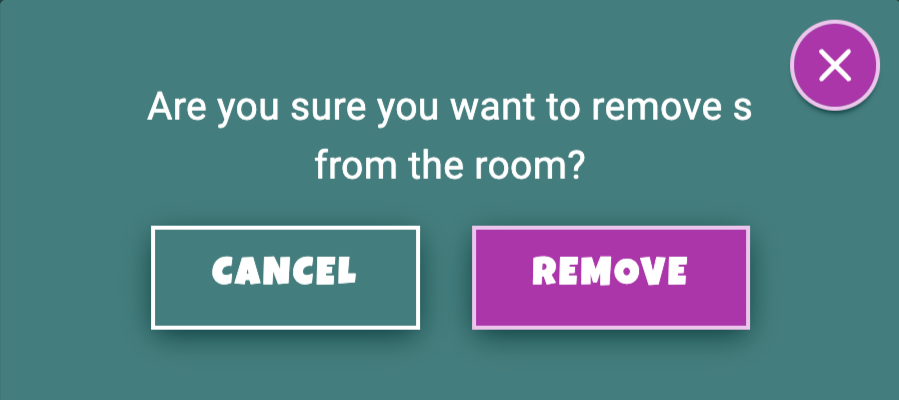
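The voting and non-voting modes described above could be modeled roughly as follows. This is a sketch under stated assumptions, not the shipped permissions system: `Role`, `Room`, and the method names are hypothetical. It shows a non-voting facilitator who can be in the room without being able to vote and without being counted among the people the room is waiting on.

```python
from enum import Enum


class Role(Enum):
    VOTER = "voter"
    OBSERVER = "observer"  # non-voting mode, e.g. a facilitator guiding the session


class Room:
    """Hypothetical room that separates voting from non-voting members."""

    def __init__(self) -> None:
        self.roles: dict[str, Role] = {}
        self.votes: dict[str, str] = {}

    def join(self, name: str, role: Role = Role.VOTER) -> None:
        self.roles[name] = role

    def submit(self, name: str, value: str) -> None:
        # Only members in voting mode may cast a vote.
        if self.roles.get(name) is not Role.VOTER:
            raise PermissionError(f"{name} is not in voting mode")
        self.votes[name] = value

    def pending(self) -> list[str]:
        # Observers never appear here, so a facilitator never blocks a reveal.
        return sorted(
            name
            for name, role in self.roles.items()
            if role is Role.VOTER and name not in self.votes
        )
```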

Dynamic Participation

Research Insight: Real meetings are fluid—people join late, drop off, multitask. Our prototype couldn't handle this reality, forcing facilitators to restart sessions when participants changed.

Product Decision: I prioritized participant management based on observation data showing teams needed to:

Remove participants mid-session (when someone drops off)

Clear votes between rounds (for multi-round discussions)

Clear entire session room (to improve return visit experience)

Support both single sessions and recurring rituals

Engineering Tradeoff: Required state management complexity and real-time updates. Team debated whether this was "must-have" or "nice-to-have"—I argued it was foundational to real-world usability.

Why It Mattered: Tool fit naturally into real meeting dynamics instead of forcing teams to change behavior. Session clearing reduced friction for ongoing adoption—teams could reuse the same room weekly without setup overhead.

Result: Supported messy real-world meetings, not just perfect demo scenarios.
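One way to picture the state management involved is a session that recomputes who it is still waiting on whenever the participant list changes. The `Session` class and its methods below are assumptions for illustration, not the real implementation.

```python
class Session:
    """Hypothetical session that tolerates late joins and mid-session drop-offs."""

    def __init__(self) -> None:
        self.participants: set[str] = set()
        self.votes: dict[str, str] = {}

    def join(self, name: str) -> None:
        # Late joiners are simply added; nothing has to restart.
        self.participants.add(name)

    def remove(self, name: str) -> None:
        # Dropping a participant also drops their vote, if any.
        self.participants.discard(name)
        self.votes.pop(name, None)

    def submit(self, name: str, value: str) -> None:
        if name not in self.participants:
            raise KeyError(f"{name} is not in the session")
        self.votes[name] = value

    def waiting_on(self) -> list[str]:
        # Derived from current state, so it stays correct as people come and go.
        return sorted(self.participants - self.votes.keys())
```

Deriving `waiting_on` from the current participants, rather than storing it, is what lets a facilitator remove someone who dropped off without restarting the round.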

Multi-Round Flexibility

Research Insight: Teams needed multiple rounds for both single sessions (iterative discussions) and recurring rituals (weekly retros), but prototype made subsequent rounds painful—everything had to be manually reset.

Product Decision: I prioritized flexible session management to support diverse ceremony types without ceremony-specific customization.

What We Built: Design and engineering created independent controls for:

Clearing votes without clearing topics (for re-voting on same items)

Clearing topics without clearing room (for new discussions in same session)

Preserving session state for recurring meetings (room stays configured)

Engineering Tradeoff: Added UI complexity and edge cases. Could have shipped faster with single-round-only support.

Why It Mattered: Single feature supported story pointing (multiple rounds in one session), retrospectives (recurring weekly meetings), and strategy planning (iterative discussions)—without requiring separate ceremony templates.

Result: One tool serving multiple use cases, validating our "participation equity platform" positioning.
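The three independent controls can be sketched as separate operations on one room object. This is an assumption-laden illustration: the `Room` fields and method names are hypothetical, and the choice that clearing a room keeps its name (so a recurring meeting can reuse it) is my reading of the behavior described above.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Room:
    """Hypothetical room with independently clearable votes, topics, and people."""

    name: str
    participants: set[str] = field(default_factory=set)
    topics: list[str] = field(default_factory=list)
    votes: dict[str, dict[str, str]] = field(default_factory=dict)  # topic -> person -> vote

    def add_topic(self, topic: str) -> None:
        self.topics.append(topic)
        self.votes[topic] = {}

    def vote(self, topic: str, participant: str, value: str) -> None:
        self.votes[topic][participant] = value

    def clear_votes(self) -> None:
        # Re-vote on the same items: topics stay, votes go.
        self.votes = {topic: {} for topic in self.topics}

    def clear_topics(self) -> None:
        # New discussion in the same session: participants stay.
        self.topics = []
        self.votes = {}

    def clear_room(self) -> None:
        # Fresh start for the next meeting; the room itself (its name) persists.
        self.participants = set()
        self.clear_topics()
```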

Accessibility First

Approach: I embedded WCAG 2.1 Level AA conformance requirements in every user story from day one.

Why This Mattered: This was my first PM project where I leveraged my accessibility expertise proactively instead of retrofitting later. We started with:

Screen reader compatibility

Keyboard navigation and focus management

Color contrast meeting AA standards

Clear focus indicators for interactive elements

Product Philosophy: Ensuring the "equity by default" promise extended to users with disabilities—not an afterthought for compliance, but a core product value.

Result: Accessibility became a non-negotiable product standard embedded in team culture.
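Of the criteria above, color contrast is the easiest to check automatically. The sketch below implements the WCAG 2.x contrast-ratio formula and the AA thresholds (4.5:1 for normal text, 3:1 for large text). The function names are my own, and the gray values in the usage note are sample colors, not StoryJam's palette.

```python
def _linear(channel: int) -> float:
    # Convert an 8-bit sRGB channel to linear light, per the WCAG 2.x definition.
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb: tuple[int, int, int]) -> float:
    r, g, b = (_linear(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(fg: tuple[int, int, int], bg: tuple[int, int, int]) -> float:
    # The lighter color always goes in the numerator.
    lighter, darker = sorted(
        (relative_luminance(fg), relative_luminance(bg)), reverse=True
    )
    return (lighter + 0.05) / (darker + 0.05)


def meets_aa(fg: tuple[int, int, int], bg: tuple[int, int, int], large_text: bool = False) -> bool:
    # WCAG 2.1 SC 1.4.3: 4.5:1 for normal text, 3:1 for large text.
    return contrast_ratio(fg, bg) >= (3.0 if large_text else 4.5)
```

For example, `meets_aa((118, 118, 118), (255, 255, 255))` passes for normal text, while the slightly lighter gray `(119, 119, 119)` on white falls just short of 4.5:1 and only passes as large text.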

Measurement Strategy

Instrumented from Day One

I designed analytics architecture from the start to track product health and business impact:

Leading Indicators (Product Health):

Vote submissions & reveals: Whether teams reached the core value (a completed simultaneous reveal)

Room size distribution: Should we design for larger groups?

Room name patterns: Recurring weekly meetings vs. one-time use (stickiness signal)

Session duration: Are meetings getting faster or bogged down?

Lagging Indicators (Business Impact):

Monthly active users: Adoption and retention

User satisfaction: Mouseflow surveys (ultimately 4.3/5 rating)

Promotional CTR: Launch effectiveness (A/B test results)

Philosophy: Measure what matters—not vanity metrics, but signals of real value and sustainable usage.
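As one possible reading of the stickiness signal, a room can be treated as recurring when the same room name shows up in sessions across more than one calendar week. The sketch below is hypothetical: the event shape (room name plus session date), the `recurring_rooms` helper, and the two-week threshold are all assumptions, not the analytics we actually shipped.

```python
from collections import defaultdict
from datetime import date


def recurring_rooms(sessions: list[tuple[str, date]], min_weeks: int = 2) -> set[str]:
    """Return room names seen in at least `min_weeks` distinct ISO weeks."""
    weeks_by_room: dict[str, set[tuple[int, int]]] = defaultdict(set)
    for room, day in sessions:
        iso = day.isocalendar()
        # Key on (ISO year, ISO week) so weeks in different years stay distinct.
        weeks_by_room[room].add((iso[0], iso[1]))
    return {room for room, weeks in weeks_by_room.items() if len(weeks) >= min_weeks}
```

A room used twice in the same week counts as one week, which keeps a single long working session from looking like a recurring ritual.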

Analytics & Experimentation

My First A/B Test

Context: Needed to promote StoryJam's launch without appearing heavy-handed (cultural sensitivity around internal tools).

Hypothesis: A specific, product-named CTA would outperform generic "try it" language.

Test Design:

Variant A: "Try StoryJam" (specific, product-named)

Variant B: "Try it Now" (generic, action-focused)

Measured: Click-through rate (CTR)

Results:

| Variant | CTR | vs. Industry Benchmark |
| --- | --- | --- |
| "Try StoryJam" | 2.00% | 3.5x better |
| "Try it Now" | 1.36% | 2.4x better |

Both variants exceeded the industry benchmark, by 3.5x and 2.4x respectively.

Decision: Rolled out "Try StoryJam" as primary CTA.

Learning: Specific, named CTAs built clarity and confidence. Even our "weaker" variant massively outperformed external benchmarks—signal that the underlying value proposition resonated.
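The reported figures can be cross-checked with a little arithmetic. The benchmark value itself is not stated here, so the sketch below derives the benchmark implied by the first variant and confirms that the second multiple follows from it. Sample sizes were not reported, so no significance test can be reconstructed from these numbers.

```python
ctr_named = 2.00      # "Try StoryJam" CTR, in percent (reported)
ctr_generic = 1.36    # "Try it Now" CTR, in percent (reported)
multiple_named = 3.5  # reported multiple of the industry benchmark

# Benchmark implied by the named variant (an inference, not a reported figure).
implied_benchmark = ctr_named / multiple_named

# Multiple for the generic variant against that same implied benchmark.
multiple_generic = ctr_generic / implied_benchmark

# Relative lift of the named CTA over the generic one.
relative_lift = ctr_named / ctr_generic - 1

print(f"implied benchmark: {implied_benchmark:.2f}%")
print(f"generic variant multiple: {multiple_generic:.1f}x")
print(f"named vs. generic lift: {relative_lift:.0%}")
```

The implied benchmark is roughly 0.57%, which puts the generic variant at about 2.4x, matching the reported figure. The named CTA's lift over the generic one is about 47%.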

Impact & Results

Quantified Outcomes

Launch Engagement

2-3x, compared to similar internal tool launches.

Monthly Users

612 monthly users.

Satisfaction Rating

4.3/5, via Mouseflow surveys.

Qualitative Impact

"I love StoryJam! The quality of conversation is improving as a result of getting everyone's opinions out."

— Manager

"It has changed the dynamic of our team story pointing sessions. I used to be the main person to talk and give my opinion and now more people on the team are speaking up"

— Senior Software Engineer

What This Proved

✅ Teams would change behavior when given the right conditions (not just cultural issue)

✅ Simultaneous reveal effectively reduced anchoring bias (validated hypothesis)

✅ Problem extended beyond story pointing (participation equity mattered broadly)

✅ Measurable outcomes justified continued investment (credibility for future work)

Key Learnings

1. Don't Assume the Problem

What Happened: Many teams had abandoned story pointing, but we initially scoped the solution narrowly around that ceremony.

Learning: By not assuming, we discovered simultaneous reveal solved participation equity across multiple meeting types—much greater impact potential.

Application: Always validate problem scope before locking in solution scope. The real problem is often broader—or different—than initial signals suggest.

2. Surface Problems Hide Systemic Issues

What Happened: What started as "remote story pointing tool" revealed deeper participation patterns preventing teams from accessing collective intelligence.

Learning: User complaints often point to symptoms, not root causes. Deep discovery reveals the systemic issues worth solving.

Application: Invest in discovery that goes beyond surface pain points. The most valuable products solve problems users don't yet articulate clearly.

3. Experiments Build Confidence

What Happened: Running A/B tests achieving 3.5× industry benchmarks and tracking value indicators gave concrete evidence our strategy worked.

Learning: Data transforms opinions into decisions. Quantified results built stakeholder confidence and justified continued investment.

Application: Design measurement into products from day one. "We think this works" is weak; "This performs 3× better than benchmarks" is compelling.

4. Research Artifacts Drive Prioritization

What Happened: Journey maps and opportunity solution trees made abstract problems concrete, revealed gaps we'd missed (like facilitator needs), and aligned the team around priorities.

Learning: Structured research artifacts create shared understanding and make prioritization decisions less subjective.

Application: Invest in synthesis artifacts that can be referenced throughout development. They pay dividends when making tradeoff decisions.

5. Accessibility Is Strategic Advantage

What Happened: Embedding WCAG 2.1 AA from the start made accessibility a team habit, not a retrofit burden.

Learning: Accessibility done well is invisible to most users—but critical for some, and beneficial for everyone (clearer focus states, better keyboard navigation).

Application: Position accessibility as product quality, not compliance checkbox. It's a competitive advantage when done proactively.

What I'd Do Differently

If I were starting StoryJam again with the insights I have now:

1. Involve Facilitators in Earlier Prototype Testing

Why: We discovered facilitator needs late, after the prototype was built. Earlier inclusion would have surfaced these requirements sooner and prevented rework.

Impact: Faster iteration, better initial design, stronger facilitator buy-in from the start.

2. Create Clearer Decision Gates for Scope Expansion

Why: The pivot from story pointing → participation equity was right, but we didn't have explicit criteria for when to expand scope vs. stay focused.

What I'd Define Upfront:

What evidence would justify scope expansion?

What thresholds would trigger scope reduction?

How do we distinguish "valuable addition" from "scope creep"?

Impact: More confident, faster decisions with less team debate.

3. Build Ceremony-Specific Templates Sooner

Why: We deferred templates to stay lean, but usage data showed teams wanted light ceremony customization (e.g., retro themes, planning frameworks).

What I'd Do: Include 2-3 basic templates in MVP based on most common ceremonies observed in research.

Impact: Faster adoption, less manual setup overhead for teams.

4. Start Analytics Instrumentation Even Earlier

Why: We instrumented the production build, but missed the opportunity to track prototype usage patterns during early testing.

What I'd Change: Add lightweight analytics to prototype phase to identify which features drove value vs. which were ignored.

Impact: More evidence-based prioritization from the beginning.

5. Create Stakeholder Communication Cadence Upfront

Why: We socialized findings ad-hoc. More structured communication (bi-weekly updates, milestone reviews) would have built broader awareness and support earlier.

Impact: Stronger stakeholder alignment, easier to mobilize resources when needed.

Final Reflection

StoryJam proved that better decisions aren't just cultural—they're structural, interaction-level, and measurable.

By designing systems that make equity the default (rather than persuading people to behave equitably), we created lasting behavioral change.

This project taught me that the most impactful products don't just optimize existing behaviors—they reshape the conditions under which better behaviors naturally emerge.